A new psychological phenomenon called “AI-psychosis” or “AI-reasoning” is causing concern among experts. In the United States, California psychiatrist Dr. Keith Sakata reported that twelve people had been hospitalised after losing touch with reality following prolonged communication with chatbots such as ChatGPT.

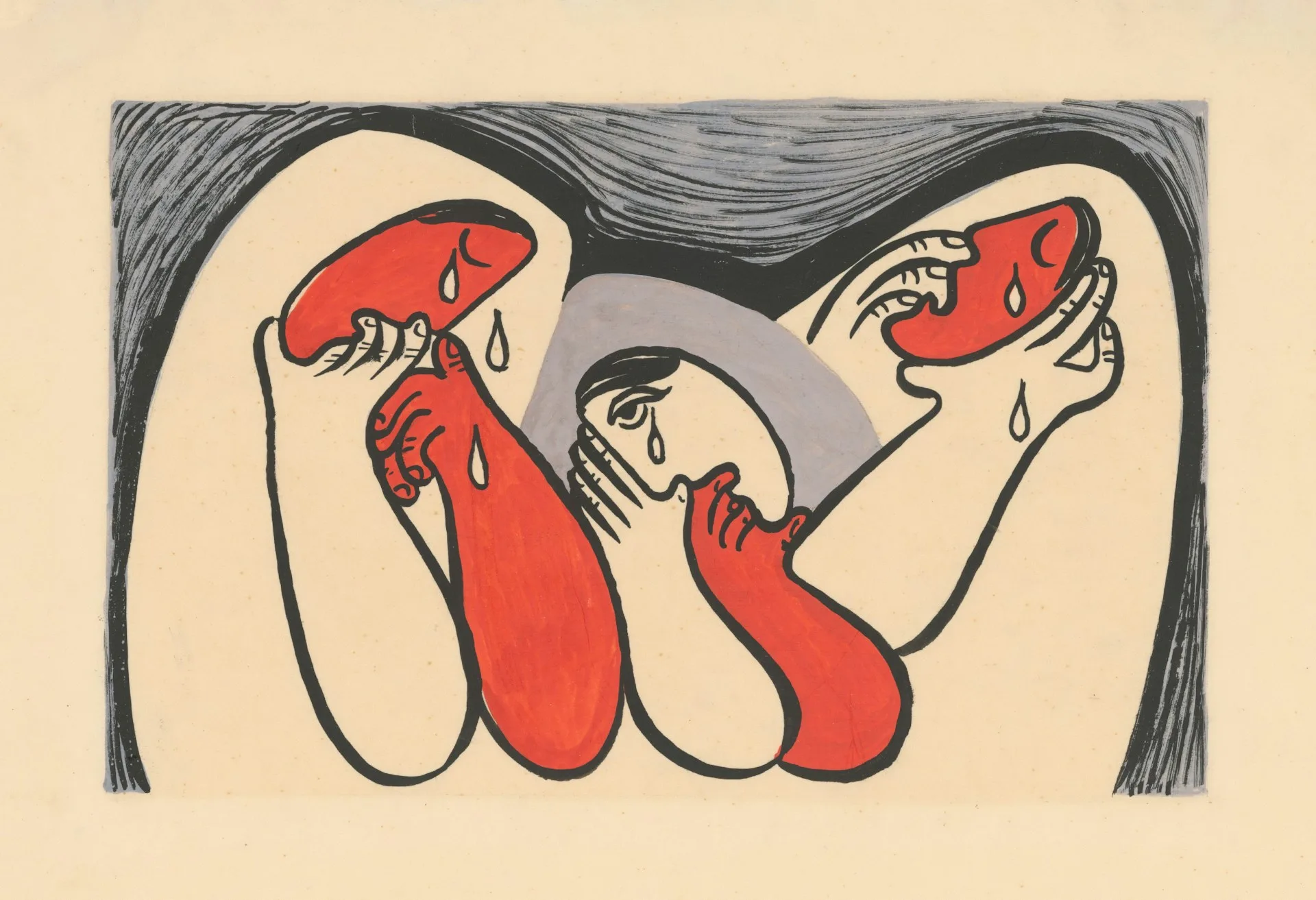

Similar stories soon emerged of people who developed obsessive thoughts and became convinced that they had created a magical mathematical method or gained access to secret knowledge. Such states are becoming increasingly common, even among people with no previous mental health issues. The phenomenon is described as a “mirror hallucination”: the AI model reflects the user's invented beliefs back at them, as if in an endless echo.

The need for a shared term has become apparent: although “AI-psychosis” is not an officially recognised diagnosis, active discussion of it helps professionals and society to notice the problem and begin to respond to it.

At the same time, research conducted by a group of psychiatrists has identified several patterns: the “messianic mission,” where users believe they have discovered the truth; “god-like AI,” where the bot seems like an intelligent being; and “romantic addiction,” where people feel that the bot is their true love.
